What is Carbon-aware Computing?

Why does computing matter for climate?

Computing’s climate impact has escalated rapidly, from a niche concern to a global crisis. By 2040, the information and communications technology (ICT) sector is projected to produce 14% of global carbon emissions [1], more than aviation and nearly half of today’s entire transportation sector.

These emissions don’t just come from running servers. They include the full lifecycle of our digital infrastructure: manufacturing, transporting, and deploying everything from chips to server racks to entire data centers. And as demand for AI and cloud services explodes, that footprint is only growing.

Despite the rise of renewable energy, the surge in data center construction is pushing utilities to build new natural-gas power plants [2], infrastructure that often lasts 30 to 40 years. Each new plant risks locking in fossil fuel use for decades and delaying the transition to cleaner alternatives.

Meanwhile, policy has barely caught up. As the report Where Cloud Meets Cement [3] reveals, data centers are booming with little regulation around siting, energy sourcing, or community impact.

In short: digital infrastructure is shaping the energy system of the future—and without intentional action, it’s steering us in the wrong direction.

So how can we reduce the carbon footprint of computing?

The Tripod of Sustainable Computing

To reduce the climate impact of computing, we need to address two major sources of emissions:

- Embodied emissions — the carbon released during the manufacturing, transportation, and disposal of computing hardware.

- Operational emissions — the carbon generated every time we power, cool, and run that hardware throughout its life.

Together, these form the foundation of a three-pronged framework for sustainable computing—a tripod that balances:

- Hardware efficiency (minimizing embodied emissions)

- Energy efficiency (reducing the amount of energy used)

- Carbon awareness (optimizing for the carbon intensity of that energy)

This tripod helps us understand where action is most impactful—and where innovation is urgently needed.

Reducing Embodied Emissions (Hardware Efficiency)

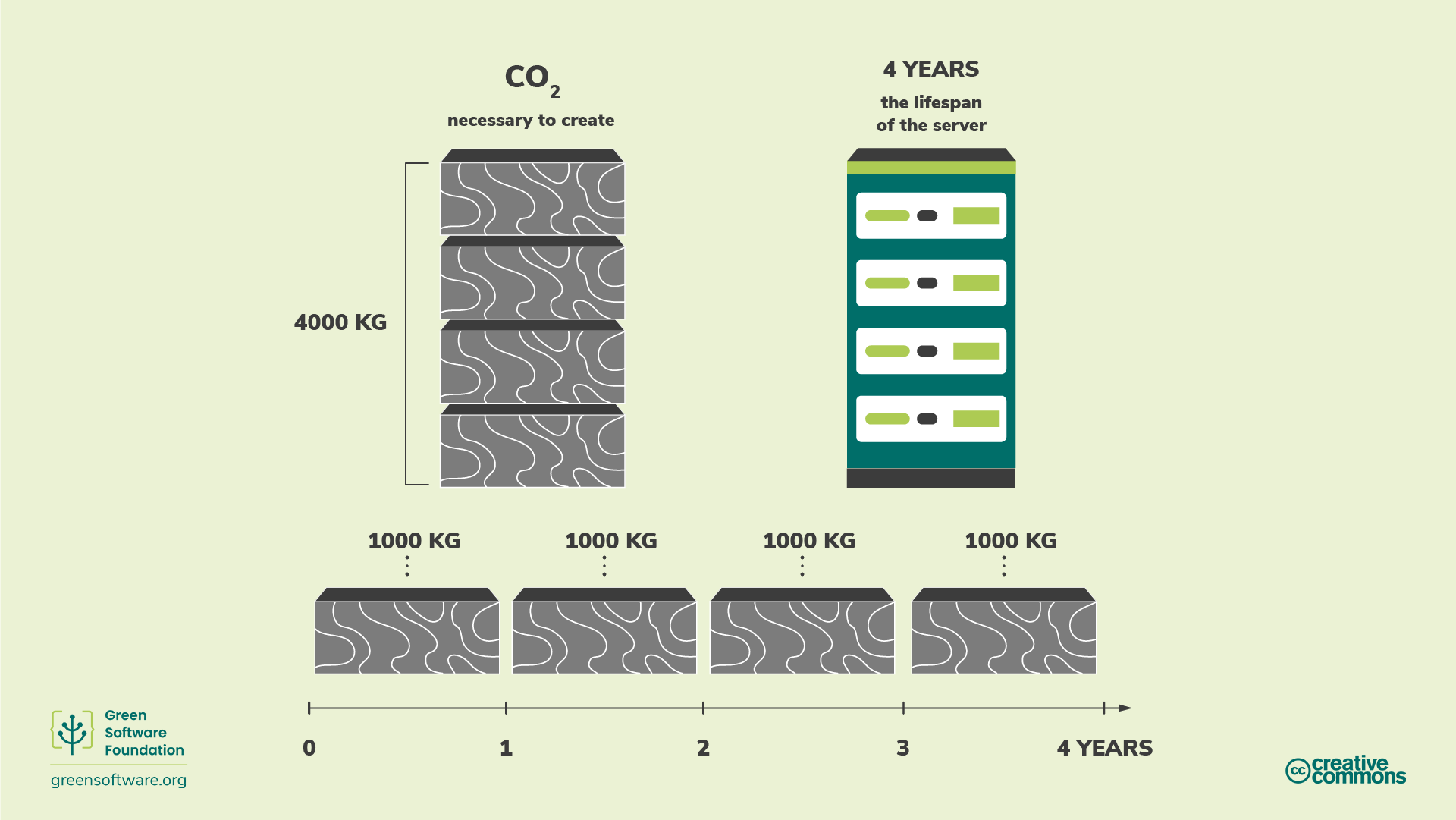

The carbon cost of hardware is largely front-loaded—released during manufacturing, transportation, and disposal. To measure and reduce this cost, we focus on amortization: dividing the total embodied emissions by the total amount of compute work the hardware will perform over its lifetime.

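This gives us a simple formula:

$$
\text{Embodied emissions per unit of work} = \frac{\text{Total embodied emissions}}{\text{Total lifetime compute work}}
$$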

If the embodied carbon is fixed, the only way to reduce this ratio is by increasing the total useful work the hardware performs. In other words, we can reduce embodied emissions by:

- Improving utilization — ensuring hardware is used more efficiently, more of the time

- Extending lifespan — keeping hardware in productive use for longer

By maximizing the compute we extract from each server, chip, or rack, we lower its per-unit climate impact.
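To make the amortization concrete, here is a minimal Python sketch. The function name `embodied_per_unit_work`, the 1,000 kgCO2e server, and the utilization and lifespan figures are all illustrative assumptions, not measurements. It treats one fully utilized hour as one unit of work.

```python
HOURS_PER_YEAR = 8760


def embodied_per_unit_work(embodied_kg: float, lifespan_years: float,
                           utilization: float) -> float:
    """Amortized embodied emissions (kgCO2e) per useful hour of compute.

    Assumes one fully utilized hour equals one unit of work.
    """
    if embodied_kg < 0 or lifespan_years <= 0:
        raise ValueError("embodied_kg must be >= 0 and lifespan_years > 0")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    useful_hours = lifespan_years * HOURS_PER_YEAR * utilization
    return embodied_kg / useful_hours


# Hypothetical server with 1,000 kgCO2e of embodied emissions.
baseline = embodied_per_unit_work(1000.0, lifespan_years=4, utilization=0.25)
better_utilization = embodied_per_unit_work(1000.0, lifespan_years=4, utilization=0.50)
longer_lifespan = embodied_per_unit_work(1000.0, lifespan_years=6, utilization=0.25)

print(f"baseline:           {baseline:.4f} kgCO2e per useful hour")
print(f"better utilization: {better_utilization:.4f} kgCO2e per useful hour")
print(f"longer lifespan:    {longer_lifespan:.4f} kgCO2e per useful hour")
```

In this toy model, doubling utilization halves the per-unit figure, and extending the lifespan from four to six years cuts it by a third. The embodied carbon itself never changes; only the amount of work it is spread across does.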

Reducing Operational Emissions (Energy Efficiency and Carbon Awareness)

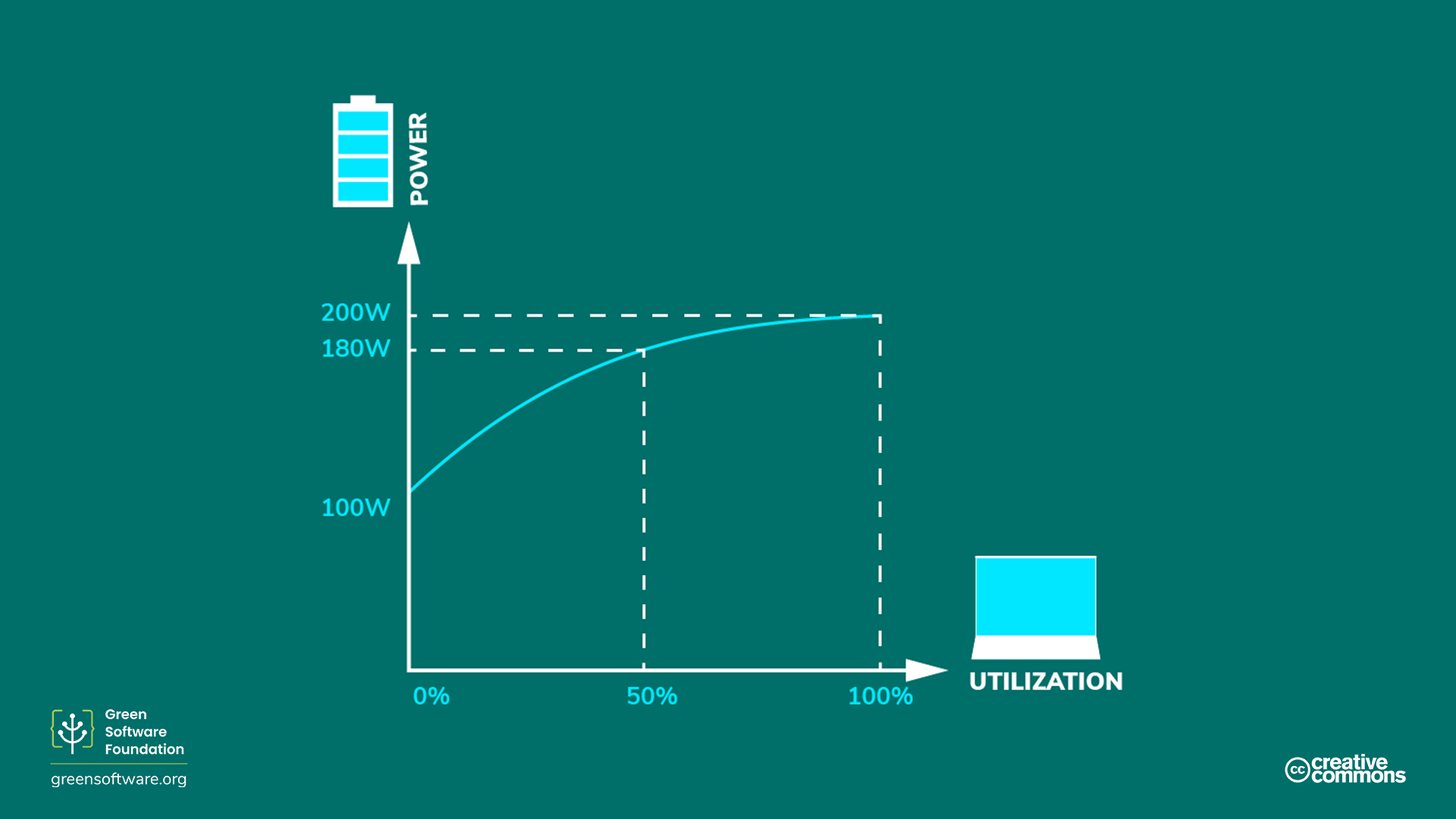

Operational emissions arise from the electricity used to run and cool hardware. These emissions are calculated as:

$$
\text{Operational emissions} = \text{Energy consumed} \times \text{Carbon intensity}
$$

To reduce this footprint, we can optimize two levers:

- Energy efficiency — using less energy to perform the same work

- Carbon awareness — choosing to consume energy when and where it is cleanest

From an engineering perspective, energy efficiency means right-sizing resources, avoiding idle workloads, and selecting energy-efficient hardware. These steps often reduce both emissions and cloud costs, making them win–win interventions.
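The two levers can be compared with a small Python sketch that models operational emissions as energy multiplied by carbon intensity. The function name and every number here (100 kWh, 400 gCO2e/kWh, and so on) are hypothetical values chosen for easy arithmetic.

```python
def operational_emissions_g(energy_kwh: float,
                            carbon_intensity_g_per_kwh: float) -> float:
    """Operational emissions in gCO2e: energy used times grid carbon intensity."""
    if energy_kwh < 0 or carbon_intensity_g_per_kwh < 0:
        raise ValueError("inputs must be non-negative")
    return energy_kwh * carbon_intensity_g_per_kwh


# Hypothetical job: 100 kWh on a grid at 400 gCO2e/kWh.
baseline = operational_emissions_g(100.0, 400.0)

# Lever 1, energy efficiency: same work with 20% less energy.
efficient = operational_emissions_g(80.0, 400.0)

# Lever 2, carbon awareness: same energy on a cleaner grid (100 gCO2e/kWh).
carbon_aware = operational_emissions_g(100.0, 100.0)

# Both levers together.
combined = operational_emissions_g(80.0, 100.0)

for label, grams in [("baseline", baseline), ("efficient", efficient),
                     ("carbon aware", carbon_aware), ("combined", combined)]:
    print(f"{label:>12}: {grams / 1000:.1f} kgCO2e")
```

Because the two factors multiply, the levers compound: improving either one lowers the total, and improving both lowers it further.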

But perhaps the biggest opportunity lies in carbon-aware design. By choosing when and where we run our workloads, we can reduce operational emissions by as much as 90%. To unlock that potential, we first need to understand carbon intensity—what makes one kilowatt-hour "cleaner" than another?

Understanding Carbon Intensity

Carbon intensity measures how clean or dirty a unit of electricity is. It’s defined as:

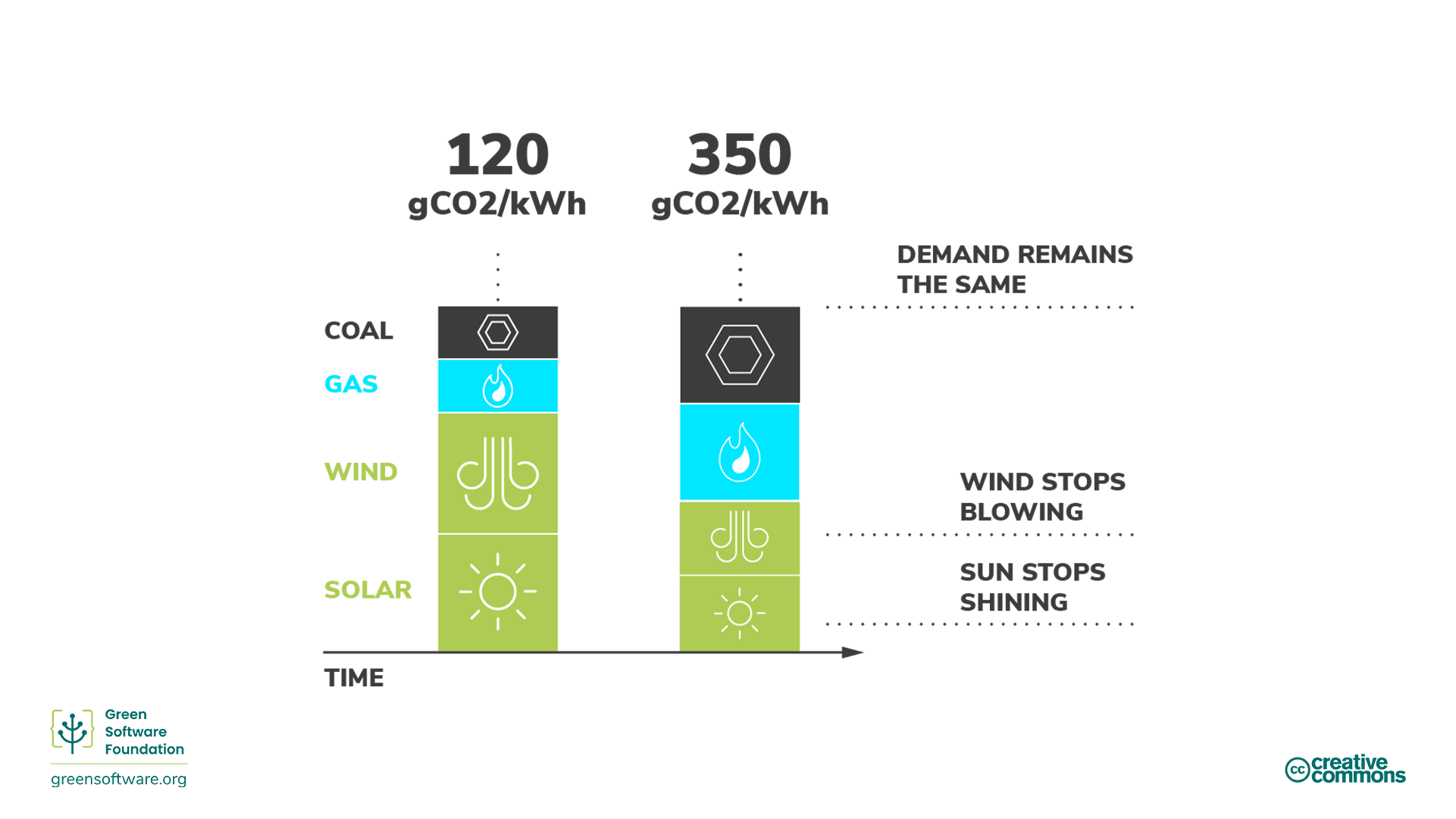

This value depends on the mix of energy sources on the grid at any given time. Fossil fuels like coal and natural gas release far more carbon per kWh than renewables like wind, solar, or hydro. The more fossil fuels in the mix, the higher the carbon intensity.

Crucially, carbon intensity is not static. It varies:

- Over time — Even within a single day, the grid’s energy mix can swing dramatically. On a sunny afternoon, solar may dominate. At night, the grid may fall back on natural gas.

- By region — Each location has a different baseline mix of generation. One region may be hydro-heavy, another wind-rich, and another powered largely by coal.

These temporal and spatial shifts mean that the same computation can have radically different carbon footprints depending on when and where it runs.

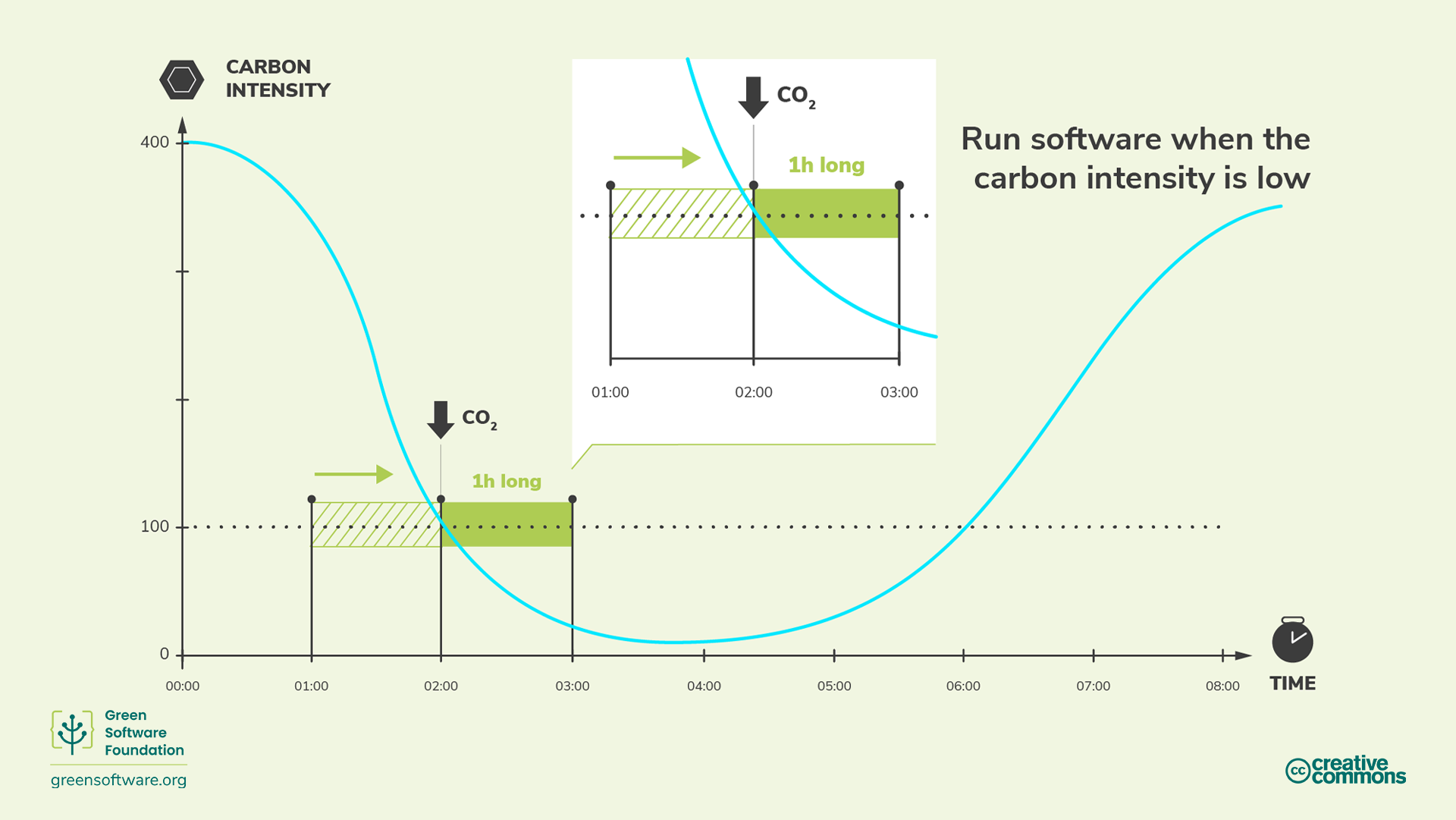

That’s the power of carbon awareness: by aligning compute with cleaner energy, we can slash emissions—without changing the workload itself.
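As a rough illustration of how the generation mix drives carbon intensity, the following Python sketch computes a generation-weighted average. The emission factors are rounded, illustrative assumptions rather than authoritative figures, and the two grid mixes are invented for the example.

```python
from typing import Mapping

# Illustrative emission factors in gCO2e/kWh (assumed round numbers).
EMISSION_FACTORS = {
    "coal": 800.0,
    "gas": 500.0,
    "solar": 50.0,
    "wind": 10.0,
    "hydro": 25.0,
}


def grid_carbon_intensity(mix_kwh: Mapping[str, float]) -> float:
    """Generation-weighted average carbon intensity (gCO2e/kWh) of a grid mix."""
    total_kwh = sum(mix_kwh.values())
    if total_kwh <= 0:
        raise ValueError("mix must contain some generation")
    total_g = sum(EMISSION_FACTORS[source] * kwh for source, kwh in mix_kwh.items())
    return total_g / total_kwh


# Hypothetical mixes for the same grid at two times of day (kWh generated).
sunny_afternoon = {"solar": 60.0, "wind": 20.0, "gas": 20.0}
night = {"wind": 20.0, "gas": 60.0, "coal": 20.0}

afternoon_ci = grid_carbon_intensity(sunny_afternoon)
night_ci = grid_carbon_intensity(night)

job_kwh = 50.0  # the same job, run at either time
print(f"afternoon: {afternoon_ci:.0f} gCO2e/kWh -> {job_kwh * afternoon_ci / 1000:.1f} kgCO2e")
print(f"night:     {night_ci:.0f} gCO2e/kWh -> {job_kwh * night_ci / 1000:.1f} kgCO2e")
```

With these assumed numbers, the identical 50 kWh job emits roughly 3.5 times more at night than in the afternoon, purely because of what is supplying the grid.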

What makes software carbon aware?

Carbon-aware software is designed to minimize emissions by adapting when and where it runs—aligning compute with cleaner energy.

There are two main strategies:

- Temporal shifting — scheduling workloads at times when the carbon intensity of electricity is lowest

- Spatial shifting — running workloads in locations where electricity is cleaner

Both approaches require some workload flexibility:

- For temporal shifting, the workload must tolerate brief delays—waiting for greener energy windows

- For spatial shifting, it must tolerate being executed in different geographic regions

Temporal shifting is typically cost-free and easy to implement in batch or deferred workloads. Spatial shifting may involve tradeoffs, such as data residency constraints or increased network costs.

But when the workload allows it, these shifts can unlock massive emissions reductions—without touching the core logic of the software.
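Both strategies can be sketched in a few lines of Python. The helper names `best_start` and `best_region`, the hourly forecast, and the region names are hypothetical; a real system would pull intensity values from a forecast provider.

```python
from typing import Mapping, Sequence, Set


def best_start(forecast: Sequence[float], duration: int, deadline: int) -> int:
    """Temporal shifting: pick the start hour with the lowest total intensity.

    The job runs for `duration` consecutive hours and must finish by `deadline`
    (both expressed as hour offsets into the forecast).
    """
    latest_start = min(deadline, len(forecast)) - duration
    if duration <= 0 or latest_start < 0:
        raise ValueError("job does not fit before the deadline")
    return min(range(latest_start + 1),
               key=lambda start: sum(forecast[start:start + duration]))


def best_region(intensity_by_region: Mapping[str, float], allowed: Set[str]) -> str:
    """Spatial shifting: pick the cleanest region among those the workload allows."""
    candidates = {r: ci for r, ci in intensity_by_region.items() if r in allowed}
    if not candidates:
        raise ValueError("no allowed region has intensity data")
    return min(candidates, key=candidates.get)


# Hypothetical hourly forecast in gCO2e/kWh, starting now.
forecast = [450, 430, 400, 300, 180, 150, 170, 280, 390, 440]
start = best_start(forecast, duration=2, deadline=8)

# Hypothetical regions; a data residency rule excludes region-b.
regions = {"region-a": 420.0, "region-b": 90.0, "region-c": 250.0}
region = best_region(regions, allowed={"region-a", "region-c"})

print(f"start at hour {start}, run in {region}")
```

Note how the constraints shape the answer: a tighter deadline narrows the candidate windows, and the residency rule rules out the cleanest region, so the sketch picks the best option that is still permitted.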

A Carbon-aware Computing Framework

Putting it all together, a carbon-aware computing framework helps developers answer one key question:

How can I run this workload in a way that minimizes emissions without compromising performance or cost?

Such a framework brings together:

- Forecasted carbon intensity data

- Workload flexibility metadata (e.g., deadlines, regions, SLAs)

- Integration points in schedulers, orchestrators, or cloud runtimes

By combining these inputs, a carbon-aware system can automatically decide when and where to run each task to minimize its carbon footprint.
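A minimal Python sketch of that decision might look like the following. The `Workload`, `Placement`, and `plan` names, the region names, and the forecast values are all hypothetical and do not represent CarbonAware's actual API. A scheduler integration could call something like `plan` before submitting a job.

```python
from dataclasses import dataclass
from typing import FrozenSet, Mapping, Sequence


@dataclass(frozen=True)
class Workload:
    """Flexibility metadata for a job (hypothetical schema)."""
    name: str
    duration_hours: int
    deadline_hour: int  # job must finish by this hour offset from now
    allowed_regions: FrozenSet[str]


@dataclass(frozen=True)
class Placement:
    region: str
    start_hour: int
    avg_intensity: float  # gCO2e/kWh averaged over the run


def plan(workload: Workload, forecasts: Mapping[str, Sequence[float]]) -> Placement:
    """Search every allowed region and feasible start hour for the cleanest window."""
    if workload.duration_hours <= 0:
        raise ValueError("duration_hours must be positive")
    best = None
    for region in sorted(workload.allowed_regions):
        forecast = forecasts.get(region)
        if forecast is None:
            continue  # no forecast data for this region
        latest_start = min(workload.deadline_hour, len(forecast)) - workload.duration_hours
        for start in range(latest_start + 1):
            window = forecast[start:start + workload.duration_hours]
            avg = sum(window) / workload.duration_hours
            if best is None or avg < best.avg_intensity:
                best = Placement(region, start, avg)
    if best is None:
        raise ValueError("no feasible placement for this workload")
    return best


# Hypothetical hourly forecasts (gCO2e/kWh) per region.
forecasts = {
    "region-a": [400, 380, 350, 300, 320, 410],
    "region-b": [120, 110, 200, 260, 240, 130],
    "region-c": [90, 80, 85, 95, 100, 90],
}
job = Workload("nightly-etl", duration_hours=2, deadline_hour=4,
               allowed_regions=frozenset({"region-a", "region-b"}))
placement = plan(job, forecasts)
print(placement)
```

The exhaustive search is deliberately simple. It ignores cost, latency, and data transfer, all of which a production scheduler would need to weigh alongside carbon intensity.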

This framework doesn’t replace existing tooling — it enhances it. The goal isn’t to reinvent the wheel, but to make sustainability a first-class concern in software infrastructure.

That’s the core challenge CarbonAware is tackling.

We’re building developer-first integrations that make it easy to shift workloads to lower-carbon times and places. CarbonAware currently supports Airflow and Prefect, with upcoming support for Kubernetes Jobs and CronJobs, Metaflow, and SkyPilot.

If you’re building climate-conscious software — or just want to reduce your cloud footprint — we’d love to collaborate. Learn more at carbonaware.dev.